What are AI agents? Explained simply.

Imagine telling ChatGPT to search across different stores to find a type of jacket you saw today. An Agent is like giving ChatGPT access to a computer.

tl;dr

Imagine an LLM (ChatGPT, Gemini, Claude, etc.) that can interact with everything on a computer (how much it can actually reach depends on the type of agent).

Imagine starting a chatbot conversation with something along the lines of “You are an Agent. You have access to tools. You have varying levels of memory access. Your main functions are searching the web, reading and referencing emails, etc. The tools you have access to are X, Y, Z,” and then continuing the conversation with that chatbot. It would start to act like an “agent”.
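To make that concrete, here is a minimal sketch of how such a prompt might be assembled from a list of tool descriptions. The `Tool` class, the `build_system_prompt` helper, and the tool names (`search_web`, `read_email`, `label_email`) are all hypothetical; real frameworks and model APIs each have their own format for declaring tools.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    """A hypothetical tool description the agent is told about."""
    name: str
    description: str


def build_system_prompt(tools: list[Tool], memory_level: str) -> str:
    """Assemble a 'You are an Agent' style system prompt from tool descriptions."""
    tool_lines = "\n".join(f"- {t.name}: {t.description}" for t in tools)
    return (
        "You are an Agent. You have access to tools.\n"
        f"Memory access: {memory_level}.\n"
        "The tools you have access to are:\n"
        f"{tool_lines}\n"
        "To use a tool, reply with its name and arguments."
    )


tools = [
    Tool("search_web", "Search the web and return result snippets."),
    Tool("read_email", "Read an email by its ID."),
    Tool("label_email", "Apply a label to an email."),
]
print(build_system_prompt(tools, memory_level="short-term only"))
```

In a real agent this string would be sent as the system message, and the model's replies would be parsed to detect tool calls.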

In simple terms, agents are programs that run a loop (in principle indefinitely, in practice until the task is done or a step limit is reached) with an LLM (maybe more than one) at the center. The LLM has access to tools, memory, and more, and the agent keeps working on whatever you command it to do.
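A minimal sketch of that loop, reusing the jacket example from the introduction, is below. A real agent would send the conversation to an LLM API at each step; here the model is replaced by a hypothetical `fake_llm` function that follows a fixed script, and the tools (`search_stores`, `compare_prices`) return made-up strings, so the control flow can run on its own. The `max_steps` cap is the kind of safeguard that keeps the loop from running forever.

```python
from typing import Callable

# Tools are plain functions the loop can call by name (hypothetical examples).
TOOLS: dict[str, Callable[[str], str]] = {
    "search_stores": lambda query: f"3 stores stock '{query}'",
    "compare_prices": lambda query: f"cheapest '{query}' is $89 at Store B",
}


def fake_llm(history: list[dict]) -> dict:
    """Stand-in for an LLM call: decides the next action from the history so far."""
    tool_calls = sum(1 for m in history if m["role"] == "tool")
    if tool_calls == 0:
        return {"action": "search_stores", "input": "green rain jacket"}
    if tool_calls == 1:
        return {"action": "compare_prices", "input": "green rain jacket"}
    return {"action": "finish", "input": "Store B has it for $89."}


def run_agent(task: str, max_steps: int = 10) -> str:
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        decision = fake_llm(history)           # 1. the model plans the next step
        if decision["action"] == "finish":     # 2. stop when the model says it is done
            return decision["input"]
        tool = TOOLS[decision["action"]]       # 3. otherwise call the chosen tool
        result = tool(decision["input"])
        history.append({"role": "tool", "content": result})  # 4. feed the result back
    return "Stopped: step limit reached."


print(run_agent("Find the jacket I saw today"))
```

Swapping `fake_llm` for a real model call is what turns this skeleton into an agent; the plan, act, observe structure stays the same.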

Agents are running programs built around an AI model (either hosted locally or a foundation model called through an API) that can plan, use tools, and take actions against an environment.

If you want a mental model that might click, imagine giving ChatGPT a physical presence in our digital world — arms to reach into documents, legs to navigate through file systems, and senses to perceive and interact with our digital tools (scary). It’s a bit like watching the Netflix show Pantheon, where they explore the fascinating (and sometimes unsettling) concept of digitized human consciousness. While the show portrays uploaded minds navigating digital spaces through sophisticated UIs, it’s not too far off from how modern AI agents operate — they’re digital entities empowered to interact with our computational world.

I know the physical body analogy might seem a bit out there (and maybe even makes some of us uncomfortable), but it helps bridge the gap between the abstract concept of AI and the very real ways agents interact with our digital environment. Just as our bodies give us agency in the physical world, these tools and APIs/interfaces give AI agents agency in the digital realm. When I watch my email organization agent deftly navigate through folders, categorize messages, and set up new organizational systems, I can’t help but see those metaphorical “arms and legs” at work. The new Claude agent that can take full control of a computer (mouse, keyboard, etc.) feels eerily like this too.

Looking at this LangChain diagram, I can’t help but be amazed at how far we’ve come in AI engineering. LangChain’s architecture captures well what makes agents work: they’re essentially orchestrators of a workflow between memory, tools, and planned actions, much like how we humans work. We plan, we draw on tools and memory, and based on all that, we take action. It feels eerie to talk about, but it is really just being explicit about how we do things, in a very programmer-esque way, by breaking the process down into concrete components.

What fascinates me most is how this architecture mirrors our own cognitive processes. Just as we store memories (both short and long-term), use tools (from smartphones to notebooks), and plan our actions, agents follow a similar pattern. The key difference? They can do it at a scale and speed that still amazes me every time I deploy one or use multiple agents to do a task.
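The short-term/long-term split can be sketched in a few lines. This is an illustrative assumption, not how any particular framework stores memory: short-term memory is a bounded buffer of recent messages that forgets the oldest ones, and long-term memory is a simple key-value store that persists facts across tasks. The `AgentMemory` class and its methods are hypothetical names.

```python
from collections import deque
from typing import Optional


class AgentMemory:
    """Toy memory with a bounded short-term buffer and an unbounded long-term store."""

    def __init__(self, short_term_size: int = 3):
        # deque(maxlen=...) silently drops the oldest item once it is full.
        self.short_term: deque = deque(maxlen=short_term_size)
        self.long_term: dict[str, str] = {}

    def observe(self, message: str) -> None:
        """Add a recent message to short-term memory."""
        self.short_term.append(message)

    def remember(self, key: str, fact: str) -> None:
        """Persist a fact in long-term memory."""
        self.long_term[key] = fact

    def recall(self, key: str) -> Optional[str]:
        """Look up a long-term fact, or None if it was never stored."""
        return self.long_term.get(key)


mem = AgentMemory(short_term_size=3)
for msg in ["hi", "find jacket", "green one", "size M"]:
    mem.observe(msg)
mem.remember("preferred_size", "M")

print(list(mem.short_term))         # ['find jacket', 'green one', 'size M']
print(mem.recall("preferred_size"))  # M
```

The oldest message ("hi") falls out of short-term memory, while the stored preference survives, which is roughly the distinction between a conversation buffer and persistent memory.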

The rising trend in Google searches for “AI Agent” isn’t just a spike in curiosity — it represents a fundamental shift in how we’re thinking about AI applications. We’re moving from static, single-purpose AI models to dynamic, interactive systems that can actually do things in our digital world. It’s like we’ve finally given AI its hands and feet to walk around and interact with our digital environment.

Email Organizer Agent Example (Narrow Task).

Here is an example of what it might look like if you have an “AI Email Agent” and ask it to organize your email and automatically assign labels.

Let’s break down this email organization agent diagram I’ve sketched out — it’s an example of how agents might operate in practice. I chose email organization because it’s something we all struggle with (my inbox is nodding in agreement), and it showcases the power of agents in handling complex, multi-step tasks.

What I find particularly elegant about this flow is how it mimics the way a human assistant would approach the task. It starts by understanding what’s there (Analyze Current Structure), looks for patterns (perhaps those newsletter subscriptions I keep meaning to organize), designs a solution, and then carefully validates before making changes. It’s methodical yet adaptable, exactly what we need in an AI assistant.
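The four stages can be sketched as plain functions run in order. Everything here is an assumption for illustration: emails are in-memory dicts rather than coming from a real email API, the "pattern" is simply the sender’s domain, and validation is a hypothetical rule that refuses any plan touching too large a share of the inbox. The point is the shape: analyze, find patterns, design a plan, and validate it before any change is applied.

```python
from collections import Counter

emails = [
    {"id": 1, "sender": "news@techdigest.com", "labels": []},
    {"id": 2, "sender": "deals@shopmart.com", "labels": []},
    {"id": 3, "sender": "news@techdigest.com", "labels": []},
    {"id": 4, "sender": "boss@work.com", "labels": ["Work"]},
]


def domain(email):
    return email["sender"].split("@")[1]


def analyze_current_structure(inbox):
    """Stage 1: see which messages are still unlabeled."""
    return [e for e in inbox if not e["labels"]]


def find_patterns(unlabeled):
    """Stage 2: count unlabeled messages per sender domain."""
    return Counter(domain(e) for e in unlabeled)


def design_solution(patterns, min_count=2):
    """Stage 3: propose a label for every domain that shows up repeatedly."""
    return {d: d.split(".")[0].title() for d, n in patterns.items() if n >= min_count}


def validate(plan, inbox, max_share=0.8):
    """Stage 4: reject plans that would relabel most of the inbox at once."""
    affected = [e for e in inbox if domain(e) in plan]
    return len(affected) / len(inbox) <= max_share


def organize(inbox):
    plan = design_solution(find_patterns(analyze_current_structure(inbox)))
    if not validate(plan, inbox):
        return {}  # nothing is changed if validation fails
    for e in inbox:
        label = plan.get(domain(e))
        if label and label not in e["labels"]:
            e["labels"].append(label)
    return plan


print(organize(emails))  # {'techdigest.com': 'Techdigest'}
```

In a real agent, the LLM would likely do the pattern finding and plan design, while the validation gate stays as ordinary code so a bad plan cannot reach the inbox.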

The tools and memory components are crucial here. The Email API isn’t just for fetching messages; it’s the agent’s hands for actually making changes. The Pattern Analysis tool is like giving the agent a pair of analytical lenses to see trends we might miss in our daily email chaos. And that Historical Labels section in memory? That’s the agent learning from past organizational decisions, much like how a human assistant would develop intuition over time.
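One way a Historical Labels memory could feed back into labeling is sketched below, assuming past decisions are stored as (sender, label) counts. For a new message, the agent reuses the label most often applied to that sender before and returns `None` for unseen senders, leaving the decision to the LLM or the user. The `HistoricalLabels` class is hypothetical, and a real agent would probably weigh this history alongside the model’s own judgment rather than use a plain majority vote.

```python
from collections import Counter, defaultdict
from typing import Optional


class HistoricalLabels:
    """Toy long-term memory of past labeling decisions, keyed by sender."""

    def __init__(self):
        self._by_sender = defaultdict(Counter)

    def record(self, sender: str, label: str) -> None:
        """Store a decision (made by the agent or corrected by the user)."""
        self._by_sender[sender][label] += 1

    def suggest(self, sender: str) -> Optional[str]:
        """Return the label most often used for this sender, or None if unseen."""
        counts = self._by_sender.get(sender)
        if not counts:
            return None
        return counts.most_common(1)[0][0]


history = HistoricalLabels()
history.record("news@techdigest.com", "Newsletters")
history.record("news@techdigest.com", "Newsletters")
history.record("news@techdigest.com", "Read Later")

print(history.suggest("news@techdigest.com"))  # Newsletters
print(history.suggest("someone@new.com"))      # None
```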

What excites me most about this architecture is its potential for growth. Today it’s organizing emails, but tomorrow it could be managing our entire digital lives, adapting and learning from our preferences along the way.

A Glimpse into the Past (November 2023, to be exact).

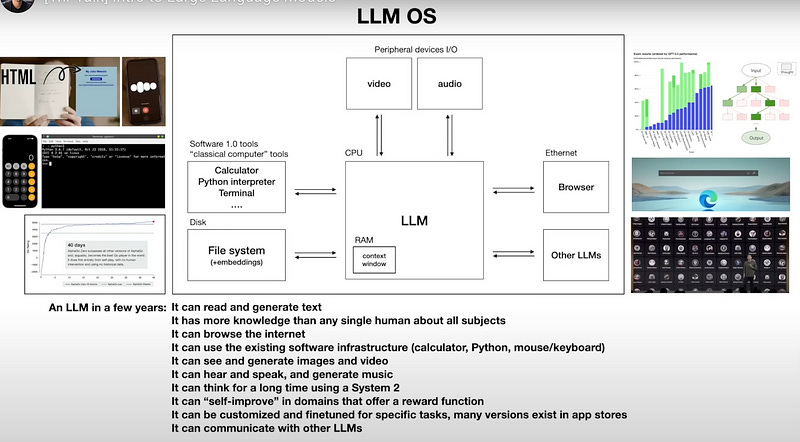

Looking at Karpathy’s LLM OS diagram from 2023, it seems he had a strong conviction about where products built around LLMs were heading. I remember watching this presentation when it first came out (it has only been a year, but a lot has changed in a very short time in this space), and like many others, I found the operating system analogy intriguing but perhaps a bit ambitious. Fast forward to today: as I build and deploy AI agents, I realize just how prescient this vision was.

What strikes me most about this visualization is how eerily it mirrors what we’re actually building with modern AI agents. The email organization agent we just explored isn’t just a simple automation tool; it’s essentially an instance of the architecture Karpathy described. The context window he emphasized as a “finite precious resource” is exactly what we grapple with when designing agents that need to juggle multiple pieces of information, just like our email agent managing historical labels and current email contents.
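Treating the context window as a budget often comes down to deciding what to drop. The sketch below keeps the system prompt and then as many of the most recent messages as fit. Token counts are approximated by whitespace-separated words, which is an assumption for illustration; real models use their own tokenizers and the counts will differ. The `fit_to_context` function is a hypothetical name.

```python
def fit_to_context(system_prompt: str, messages: list[str], max_tokens: int) -> list[str]:
    """Keep the system prompt plus the newest messages that fit in max_tokens."""

    def count_tokens(text: str) -> int:
        # Rough stand-in for a real tokenizer.
        return len(text.split())

    budget = max_tokens - count_tokens(system_prompt)
    kept: list[str] = []
    for message in reversed(messages):   # walk from newest to oldest
        cost = count_tokens(message)
        if cost > budget:
            break                        # this and all older messages are dropped
        kept.append(message)
        budget -= cost
    return [system_prompt] + list(reversed(kept))


history = [
    "Email 1: quarterly report attached",
    "Email 2: team lunch on Friday",
    "Label history: reports go to Work",
]
print(fit_to_context("You organize emails.", history, max_tokens=15))
```

With a 15-"token" budget, the oldest email no longer fits and is dropped. Other strategies, such as summarizing old messages instead of discarding them, trade extra LLM calls for less information loss.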

The parallels between traditional operating systems and this “LLM OS” become even more fascinating when you consider our agent architecture. Just as an OS coordinates resources and handles I/O operations, our agents orchestrate tools, manage memory, and coordinate actions. That file system with embeddings? It’s essentially what powers our agent’s long-term memory. The CPU with its context window? That’s where our agent processes and plans its next moves.
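A “file system with embeddings” usually means storing text alongside a vector and retrieving the closest matches by similarity. In the sketch below, `embed` is a toy bag-of-words counter standing in for a real embedding model (an assumption so the example runs without dependencies), and `VectorMemory` is a hypothetical class. The retrieval step, ranking stored memories by cosine similarity to the query, is the part that carries over to real systems.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real agent would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorMemory:
    def __init__(self):
        self.items: list = []  # (text, vector) pairs

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = VectorMemory()
memory.add("newsletters from techdigest get the Newsletters label")
memory.add("invoices from accounting get the Finance label")
memory.add("messages from my manager get the Work label")
print(memory.search("which label for accounting invoices"))
```

A real embedding model would also match paraphrases ("bills" vs. "invoices"), which plain word overlap cannot.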

What I find particularly mind-bending is how this concept has evolved from theoretical to practical in roughly a year. When Karpathy talked about “Software 1.0 tools” like calculators and Python interpreters interfacing with the LLM, it might have seemed like a far-off vision. Yet here we are, building agents that casually use these exact tools to solve real-world problems. Our email organization agent example is living proof: it’s interfacing with email APIs, managing file systems, and coordinating multiple tools, just as predicted.
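A calculator is the classic Software 1.0 tool: LLMs can slip on exact arithmetic, so the agent hands the expression to ordinary code. Below is a minimal sketch, assuming the model emits a plain arithmetic string; `calculator` is a hypothetical tool name. Instead of calling `eval`, it walks Python’s `ast` tree and allows only numbers and basic operators, so arbitrary code produced by the model cannot run.

```python
import ast
import operator

# Only these operators are allowed; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,  # a production tool might also cap exponent size
    ast.USub: operator.neg,
}


def calculator(expression: str) -> float:
    """Safely evaluate an arithmetic expression produced by the model."""

    def _eval(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"Unsupported expression: {expression!r}")

    return _eval(ast.parse(expression, mode="eval").body)


print(calculator("1234 * 5678"))       # 7006652
print(calculator("(2 + 3) ** 2 / 5"))  # 5.0
```

The agent loop would register `calculator` as a tool and feed its result back to the model, just like any other tool call.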

The beauty of this architecture becomes apparent when you realize it’s not just about having a smart chatbot — it’s about having a system that can truly orchestrate and execute tasks in our digital world. It’s the difference between having a consultant who can give you advice about organizing your emails and having an assistant who can actually roll up their sleeves and do the organizing for you.

This vision of an “LLM OS” helps explain why agents are so powerful — they’re not just programs; they’re becoming something akin to operating systems for AI-human interaction. And similar to how Linux spawned countless distributions, we’re seeing a proliferation of specialized agents built on top of foundation models, each tailored to specific needs and use cases.

The future Karpathy painted is unfolding before our eyes, and our email organization agent example is just one small window into this emerging world. As someone building in this space, I find it both humbling and exciting to see these predictions materialize into practical applications that can genuinely make our digital lives better.

Conclusion

As someone who’s been deep in the trenches of AI development lately, I can’t help but feel we’re at a pivotal moment in the evolution of AI agents and multi-agent workflows/use cases. They’re not just chatbots with extra features — they’re the beginning of truly interactive AI systems that can understand, plan, and act in our digital world.

The beauty of agents lies in their adaptability and potential for growth. Each component — whether it’s memory management, tool integration, or action planning — can be enhanced and expanded. As these systems evolve, they’ll become increasingly sophisticated in understanding our needs and executing complex tasks.

What keeps me up at night (in the best and worst way possible) is imagining where this technology is headed. The email organization agent we explored is just the tip of the iceberg. As we continue to develop more sophisticated tools and memory systems, and as language models become even more capable, the possibilities are boundless.

Remember though — agents aren’t magic. They’re carefully engineered systems that require thoughtful design and implementation. But when done right, they represent a significant step toward making AI not just intelligent, but genuinely helpful in our daily lives.

The future of AI isn’t just about smarter models — it’s about models that can actually do things. And agents are leading that charge, one tool, one memory, and one action at a time.